The microchip, usually smaller than a fingernail, is a crucial part of today’s digital age. It powers everything from simple gadgets to high-tech supercomputers. The journey of the microchip, from an idea to a vital component in almost all modern technology, is a tale of innovation, intrigue, and worldwide influence.

This article takes a deep dive into the history of the microchip, from its simple origins to its massive influence on the digital age. Come along as we follow the forward-thinking scientists and engineers who turned a complicated idea into a reality that affects every part of our daily lives. The story of the microchip isn’t just about circuits and silicon; it’s about the limitless potential that comes from combining curiosity, creativity, and determination.

The Story of the Microchip: The Building Block of Today’s Electronics

Before Integrated Circuits (ICs) came into the picture, electronic devices were built using a variety of separate components. Each of these components had a specific role and was crucial to the technology of that time:

- Resistors: These limited and controlled the current flowing along each path in a circuit. Setting signal levels and dividing voltages were basic jobs in almost every electronic device, and resistors did both.

- Capacitors: These parts stored electrical energy and were important for filtering and stabilising electrical signals. Capacitors also had a major role in timing and coupling applications, shaping how electronic circuits handled signals.

- Inductors: Like capacitors, inductors store energy and were used to filter and stabilise signals. But instead of storing electrical charge, they store energy in a magnetic field. Because of this, inductors were crucial for smoothing power supply voltages, for building the filters found in radio systems, and for making oscillators.

- Transformers: By changing voltage levels, transformers were crucial for the safe and efficient operation of electronic devices. They transferred electrical energy between different parts of a circuit without a direct electrical connection, and were often used in power supplies and audio systems.
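Two of the jobs described above can be written as simple relations. Two resistors R1 and R2 in series divide an input voltage in proportion to their values (measured across R2, and assuming nothing else draws current from the output), and an ideal transformer with Np primary turns and Ns secondary turns scales voltage by its turns ratio (real transformers lose a little energy to resistance and magnetic leakage):

```latex
V_{\mathrm{out}} = V_{\mathrm{in}}\,\frac{R_2}{R_1 + R_2},
\qquad
\frac{V_s}{V_p} = \frac{N_s}{N_p}
```

For example, two equal resistors halve the input voltage, and a transformer with ten times as many primary turns as secondary turns steps 230 V down to 23 V.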

The Crucial Function of Valves in Electronic Devices

Alongside these passive parts, valves, also known as vacuum tubes, were especially important in the historical growth of various technologies:

- Radio and Television Sets: Valves were at the core of early radio and television equipment. They amplified audio and video signals, making it possible to broadcast and receive radio and television content. Without valves, the early growth of broadcasting technology would have been greatly slowed.

- Audio Amplifiers: In the world of sound, valves had a key role in boosting audio signals in various devices, including public address systems and home audio equipment.

- Early Computers: Perhaps most importantly, valves were used in the first electronic computers. Their ability to switch and amplify electronic signals made them central to the computing and processing tasks of these groundbreaking machines.

This time period, marked by the use of separate components like valves, prepared the way for the next big step in electronics: the creation of the integrated circuit. The shift from these individual parts to the small, efficient world of microchips was a big milestone in the growth of electronic technology, leading to the advanced digital age we live in today.

Key Milestones in the Evolution of Microchips: A Timeline

This timeline offers a glimpse of the ongoing journey of creativity and technological progress. Each major event in the development of the microchip has changed not only electronics but also our daily lives and the digital world as a whole, and this story of progress is still being written.

1947 – Birth of the Transistor: The transistor, a key forerunner to the microchip, was invented at Bell Labs. This marked a big change from vacuum tube technology, setting the stage for making electronics smaller.

1958 – Jack Kilby’s Integrated Circuit: Jack Kilby at Texas Instruments made the first integrated circuit, a major breakthrough that set the groundwork for modern microchips.

1959 – Robert Noyce’s Practical Integrated Circuit: Robert Noyce, co-founder of Fairchild Semiconductor and later Intel, independently invented a more practical version of the integrated circuit, which made mass production possible.

1961-1965 – NASA Embraces Microchips: NASA became a key early user of microchip technology, greatly driving its development and reliability.

1971 – Arrival of the Intel 4004: The Intel 4004, the world’s first commercially available microprocessor, was introduced, changing computing by putting an entire CPU on a single chip.

1984 – The Adidas Micropacer: The launch of the Adidas Micropacer, the first shoe to use a microchip, showed the microchip’s versatility beyond traditional computing.

Late 20th Century – Fast Progress and Miniaturization: The microchip industry saw fast growth, with quick progress in making things smaller and more power efficient, leading to the spread of microchips in a wide range of devices.

21st Century – Microchips Everywhere: Microchips became common, powering everything from smartphones to critical infrastructure, and became a key part of global economics and geopolitics.

The Difficulties Faced in the Time Before Microchips

The challenges of early electronics are a key part of the microchip’s history. Despite major advances, early electronic designs still faced serious problems that showed the need for smaller and more efficient technologies.

The Problems with Separate Components

Separate (discrete) components such as resistors, capacitors, and valves were easy to handle and wire by hand, which made them well suited to hands-on assembly. However, this approach had its downsides, most notably that it could not produce small, portable devices. This limit on size and portability became a serious problem as demand for smaller electronics grew.

The High Cost of Valves

Valves, which were important for amplifying electronic signals, were especially problematic because they were bulky, heavy, and needed a lot of power to operate, including power to heat their filaments. This high power use was a serious drawback in applications where efficiency and portability mattered.

The Effect on Early Computers

The problems with discrete components were most obvious in computing. Early computers were extremely large, often filling entire rooms or floors of buildings, and the largest could draw well over a hundred kilowatts of power, making them expensive to run and maintain. The frequent burnout of valves added to the maintenance burden: valves needed regular replacement, and tracking down a failed valve among thousands was no easy task.

The Arrival of Printed Circuit Boards

The introduction of printed circuit boards (PCBs) brought organizational benefits, allowing components to be assembled more compactly. But even with this step forward, circuits stayed large and still needed a lot of manual assembly. Even after wave soldering and other mass-production techniques arrived, the size of discrete components limited how complex and scalable electronic devices could become.

The time before microchips, with its reliance on separate components, was a time of both innovation and serious challenges. These challenges, especially in size, power use, and maintenance, highlighted the need for a new approach to electronics design. That need led to the development of integrated circuits, which promised to solve many of these problems and sparked a revolution in electronics. The move to microchips was a response to the growing demand for smaller, more efficient, and more reliable electronic devices, and it marked a key moment in the growth of technology.

The Difficulty of Miniaturizing Components Before the Microchip Era

Before microchips, it was clear that separate components just couldn’t make electronic circuits small enough. But what was it about separate components that made them so hard to shrink?

The Complicated Nature of Valves

Valves, which were crucial in early electronics for boosting signals, had a unique set of problems when it came to making them smaller:

- Handmade and Dependent on Electric Fields: Valves were built by hand and relied on electric fields controlled by carefully placed grids. This complex construction made them very hard, if not impossible with the technology of the time, to shrink. Making a valve physically smaller also changes its electrical properties.

- No Suitable Machines: While valves were in common use, there were no machines that could manufacture them at the very small scales that miniaturisation demanded. Such machines would have needed the kind of precision control systems that later relied on microchip technology, so valve technology could never get past a certain point.

- Problems with Vacuums: Valves rely on maintaining a vacuum, which is hard to achieve on a smaller scale. Creating and keeping a vacuum inside tiny devices posed serious technical problems, especially since valves were often built by hand.

The Size Constraints of Resistors and Capacitors

Resistors and capacitors, even though they’re simpler than valves, also had problems when it came to making them smaller. The way resistors and capacitors work is closely tied to their physical size, so it’s almost impossible to make these components smaller without changing how they perform. For example, a smaller resistor might keep its resistance value, but its power rating would be much lower. In the case of capacitors, making a capacitor smaller (without using new materials) will result in either a lower capacitance or a lower operating voltage.
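The capacitor trade-off can be seen in the idealised parallel-plate model, a simplification that ignores fringing fields. Here A is the plate area, d is the dielectric thickness, εr is the relative permittivity of the dielectric, and Ebd is the field strength at which the dielectric breaks down:

```latex
C = \frac{\varepsilon_0 \varepsilon_r A}{d},
\qquad
V_{\max} \approx E_{\mathrm{bd}}\, d,
\qquad
W_{\max} = \tfrac{1}{2} C V_{\max}^2 \approx \tfrac{1}{2}\,\varepsilon_0 \varepsilon_r E_{\mathrm{bd}}^2\,(A\,d)
```

Shrinking A lowers C. Thinning d restores C but lowers the maximum voltage in the same proportion, and the most energy the capacitor can store grows with the dielectric’s volume A·d. Only a better material, with a higher εr or Ebd, escapes this trade-off. A resistor’s power rating is limited in a similar way: the heat P = I²R that it generates must escape through its body, and a smaller body sheds heat less readily.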

The fact that these basic components couldn’t be made significantly smaller was a major obstacle to making electronics more compact and efficient. This limitation was a key reason for the development of integrated circuits, which promised to get past these barriers. The move to microchip technology wasn’t just about convenience; it was a necessary step to meet the growing demand for smaller, more powerful, and more reliable electronic devices. This change marked a key moment in the history of electronics, setting the stage for the remarkable progress of the digital age.

The Arrival of the Transistor: A Groundbreaking Change in Electronics

The arrival of the transistor was a game-changer in the world of electronics, leading the way for the small size and efficiency that we see in today’s devices. This section looks into the beginnings and impact of the transistor, a part that changed the field.

The Initial Period of Semiconductor Technology

Semiconductor technology goes back to the early 1900s, with one of the first examples being the cat’s-whisker detector, a simple semiconductor diode. It was used in radio receivers as a rectifier to detect signals, showing the potential of semiconductors in electronics. However, for many years semiconductors were mostly limited to diodes like this, and their wider potential went untapped.

The Inception of the Transistor

The world of electronics was dramatically changed by research at Bell Labs. Working in a semiconductor group led by William Shockley, researchers at Bell Labs made two major breakthroughs in semiconductor technology:

- Creation of the Point-Contact Transistor (1947): John Bardeen and Walter Brattain invented the point-contact transistor at Bell Labs in 1947. It was the first time semiconductors were used to make an active device capable of controlling one current with another.

- Invention of the Bipolar Junction Transistor (1948): A year later, in 1948, Shockley conceived the bipolar junction transistor. This design refined the idea of the transistor, improving its performance and reliability.
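In a bipolar junction transistor operating in its normal (active) region, the idea of one current controlling another can be summarised by the current gain β, which is typically somewhere in the tens to hundreds for small-signal devices. The collector current I_C is roughly β times the base current I_B, and the emitter carries the sum of the two:

```latex
I_C \approx \beta\, I_B,
\qquad
I_E = I_B + I_C
```

For example, with β = 100, a base current of 10 µA controls a collector current of about 1 mA, which is why a small signal can drive a much larger one.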

The Benefits of Transistors

Even though transistors had some early problems with reliability and were hard to use, they quickly became popular for several reasons:

- Compact Form: Transistors were a lot smaller than the valves they replaced, which solved one of the big problems of components before microchips.

- Economical: Transistors cost less in materials and manufacturing than valves, making them a cheaper choice for electronic devices.

- Energy Efficient: Transistors used a lot less power than valves, which made electronic devices more efficient and portable.

There’s no doubt that the arrival of the transistor was a key moment in the history of electronics. It marked a change from the big, power-hungry parts of the past to a new time of small, efficient, and reliable electronics. This step forward set the stage for the integrated circuits that would come later, further changing the world of electronic technology and opening up a world of possibilities in the digital age.

The Emergence of the Microchip: From Idea to Implementation

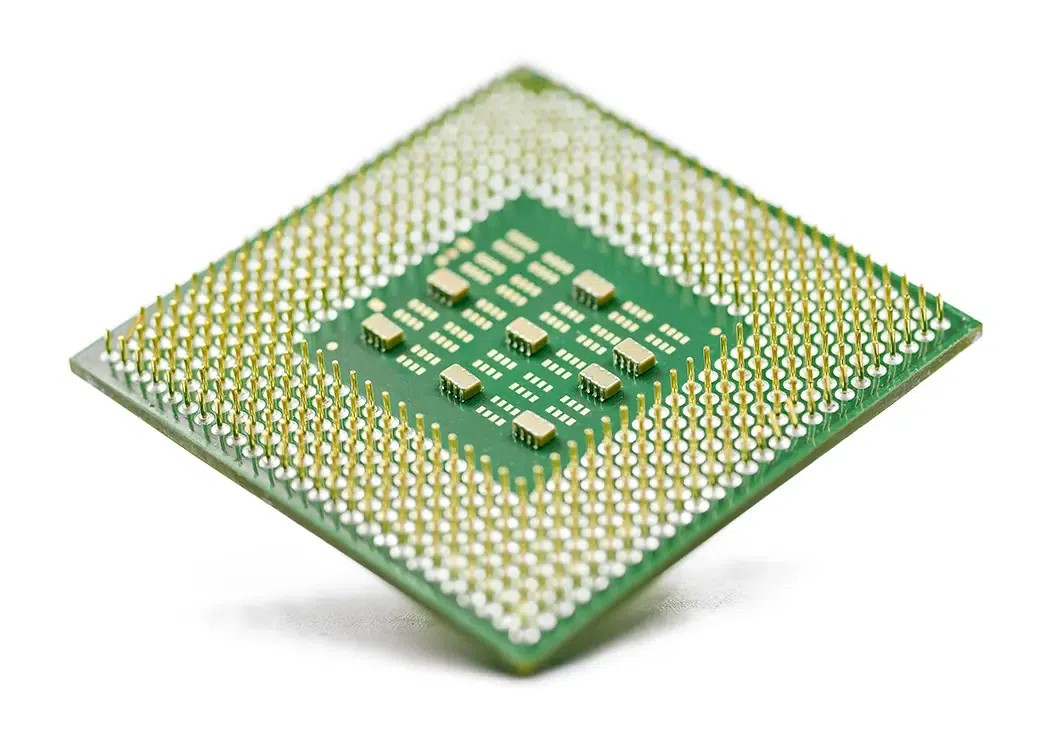

The creation of the transistor was a big step forward in electronics. But the real game-changer was the development of the microchip, also known as an integrated circuit (IC). This journey from an idea to a practical, mass-produced device is a key moment in tech history.

Initial Efforts to Combine Circuits

Initially, the unique properties of semiconductors inspired researchers to envision entire circuits built on a single piece of crystal. Despite the benefits of transistors, there was clear potential for even greater miniaturisation. However, early attempts to integrate circuits onto a single device were fraught with challenges and frequent failures.

Jack Kilby’s Pioneering Breakthrough

In 1958, Jack Kilby of Texas Instruments made a major breakthrough, developing what many consider to be the first integrated circuit. The new circuit was innovative enough that it was offered to the U.S. Air Force. But Kilby’s invention had drawbacks: the circuits were not very reliable and often failed during production, and they were only cost-effective for certain uses, such as defense. Kilby’s design also wasn’t a true integrated circuit as we know it today, because it needed fine gold wires to connect its components, which made it more complex to build.

Robert Noyce’s Single-Piece Integrated Circuit

A year later, in 1959, Robert Noyce, working with a team of engineers at Fairchild Semiconductor, invented the monolithic integrated circuit. This invention would go on to transform the industry.

- Noyce’s monolithic design formed all of the circuit’s components within a single piece of silicon, as specially treated (doped) regions. This simplified manufacturing and allowed a whole device to be made in one process.

- In contrast to Kilby’s hybrid circuits, Noyce’s monolithic integrated circuits could be made at low cost, making them well suited to mass production.

- Noyce’s design was made practical by the planar process developed by his colleague Jean Hoerni, together with aluminium interconnect lines deposited on the chip itself.

The Aftermath and Influence

While Jack Kilby is credited with building the first integrated circuit, it was Robert Noyce who made the first practical integrated circuit, the basis of today’s microchip technology. This highlights the collaborative, step-by-step nature of technological innovation, where initial ideas are refined into marketable products.

The arrival of the microchip started a revolution in electronics, leading to the creation of electronic devices that were smaller, more efficient, and more powerful. This breakthrough was a main factor in the tech boom of the late 20th century, paving the way for the digital age that characterizes our current time.

The Initial Difficulties in Microchip Technology

The birth of the microchip was a monumental step forward in electronics, but the early stages of this technology faced significant historical obstacles. These initial challenges played a key role in shaping the development and eventual widespread use of microchips.

Problems with First-Generation Microchips

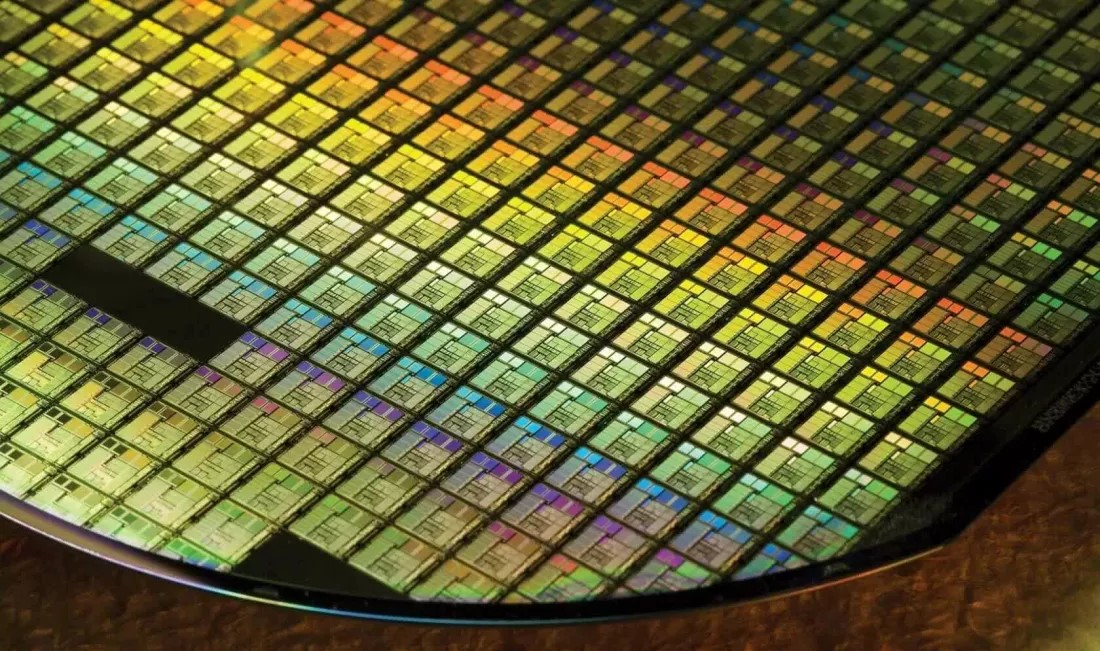

The first microchips, despite their revolutionary potential, were troubled by serious reliability issues. Impurities in the crystalline wafers and the difficulty of maintaining clean manufacturing environments kept yields low, and the chips that did work were not always reliable.

High Costs and Production Challenges

Another major hurdle was cost. Early ICs were very expensive to produce, mainly because the technology was new and difficult to manufacture. Low yields made this worse: many of the chips produced did not work, which raised the cost of each working chip. This high cost made it difficult to introduce microchips into consumer markets, where profit margins are typically very thin.
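The link between yield and cost can be made concrete with simple arithmetic: the cost of each working chip is the cost of processing a wafer divided by the number of chips on it that actually work. The sketch below uses hypothetical figures (a wafer cost of 1,000 units and 100 chip sites per wafer) chosen only for illustration, not historical data.

```python
def cost_per_good_chip(wafer_cost: float, chips_per_wafer: int, yield_fraction: float) -> float:
    """Return the cost of one working chip.

    wafer_cost: cost to process one wafer (hypothetical units)
    chips_per_wafer: number of chip sites on the wafer
    yield_fraction: fraction of chips that work, in the range (0, 1]
    """
    if not 0 < yield_fraction <= 1:
        raise ValueError("yield_fraction must be in (0, 1]")
    good_chips = chips_per_wafer * yield_fraction
    # The whole wafer's cost is spread over only the chips that work.
    return wafer_cost / good_chips


# Hypothetical numbers for illustration only: the same wafer at three yields.
for y in (0.1, 0.5, 0.9):
    print(f"yield {y:.0%}: cost per working chip = {cost_per_good_chip(1000, 100, y):.2f}")
```

With these made-up figures, raising the yield from 10% to 90% cuts the cost of each working chip from 100 units to about 11, even though the wafer itself costs the same.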

NASA: An Important Early Adopter

Despite these challenges, one customer, NASA, saw the value of microchips, which made it possible to build complex electronics with less size and weight. Between 1961 and 1965, NASA became the single largest consumer of integrated circuits because the space agency needed compact, lightweight, and reliable electronics. This made NASA an ideal early adopter of microchip technology: it could afford to pay for the technology despite its high cost and reliability issues. NASA’s investment in these early microchips played a significant role in advancing the technology.

Advancements in Microchip Fabrication

Over time, government funding of commercial manufacturers, combined with advances in fabrication technology, began to address these challenges. Improvements in the manufacturing process increased wafer yields, which in turn made microchips cheaper and more reliable.

The Breakthrough in the Commercial Market

Once microchips became more feasible for the commercial market, demand for them soared. The combination of reduced costs, improved reliability, and the inherent advantages of microchips over previous technologies led to a rapid increase in their use. This shift marked the start of a new era in electronics, with microchips becoming the foundational technology for a wide range of electronic devices and systems.

The Expansion of the Microchip Field

The successful creation and steady improvement of microchip technology led to rapid growth in the electronics industry. This period saw the birth of many companies and the use of microchips in consumer devices, starting a new age in electronics.

Rise of Important Companies in the Industry

During this time of change, several companies that would become big names in the electronics industry started to appear. Texas Instruments (TI), Fairchild Semiconductor, and Intel were just a few of the many companies leading the way in microchip technology. These companies played a key role in the design, manufacture, and spread of integrated circuits, driving innovation and competition in the industry.

Microchips in Consumer Electronics

The first microchips to be used in consumer devices were relatively simple but essential parts. These included:

- Timer ICs: Basic timer integrated circuits, like the 555 timer, became a key part in many electronic applications because of their flexibility and dependability.

- Logic Gates, Drivers, and Amplifiers: These parts were the foundation of more complicated electronic devices, allowing for a variety of functions from processing signals to managing power.

The 7400 and 4000 Device Series

During this time, two particularly well-known families of integrated circuits emerged: the 7400 series (TTL logic) and the 4000 series (CMOS logic). These device families played a key role in the progress of electronics:

- Creation of Complex Devices: When these ICs were used in combination, they could create complex devices that would have been extremely complicated to build from separate components.

- Shrinking Computers: These first-generation ICs had a crucial role in shrinking the size of computers from taking up an entire floor to fitting within a single room.
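To illustrate how simple logic ICs combine into more complex functions, the sketch below models 2-input NAND gates in Python (the 7400 itself packages four of them) and wires them into a half adder, the basic building block of binary addition. This is a software model for illustration, not a description of any particular historical design.

```python
def nand(a: int, b: int) -> int:
    """2-input NAND: the output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1


def xor(a: int, b: int) -> int:
    # Classic four-NAND XOR construction (the same number of gates as one quad-NAND package).
    n1 = nand(a, b)
    return nand(nand(a, n1), nand(b, n1))


def and_(a: int, b: int) -> int:
    # AND is a NAND followed by a second NAND wired as an inverter.
    n = nand(a, b)
    return nand(n, n)


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two 1-bit values built only from NAND gates; return (sum, carry)."""
    return xor(a, b), and_(a, b)


# Print the full truth table.
for a in (0, 1):
    for b in (0, 1):
        s, c = half_adder(a, b)
        print(f"{a} + {b} -> sum={s}, carry={c}")
```

Chaining half adders (plus a few more gates) gives multi-bit adders, which is the kind of layering that let small logic ICs stand in for large assemblies of discrete parts.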

The Ongoing Pursuit of Miniaturization

Despite these advancements, the push for further miniaturization continued. While integrated circuits greatly reduced the size and complexity of electronic devices, there was still a need for even smaller and more efficient components. This continuous pursuit of miniaturization and efficiency kept driving innovation in the industry, leading to the creation of more advanced microchips and eventually leading to the emergence of microprocessors and complex digital systems.

The Arrival of the Microprocessor: A Significant Step in Computing

The Challenges of Early Logic-Based Computers

While integrated circuits made it possible to build more powerful computers than those using individual transistors and valves, these early computers were still bulky and complicated to construct. Their complexity was mainly due to the large number of separate ICs needed, which created issues with space, power usage, and production.

The Busicom Project and Intel’s Breakthrough

The path to the first microprocessor began with a project for Busicom Corp, a Japanese calculator company. In 1969, Busicom asked Intel to design and make a set of seven chips for a new calculator. Intel engineer Marcian “Ted” Hoff, working with Stanley Mazor, saw a chance to simplify the design and proposed a single general-purpose CPU chip in place of several specialised ICs; Federico Faggin later led the chip’s detailed design. Busicom agreed to this innovative idea, and, importantly, Intel later secured the rights to sell the chip to other customers.

The Creation of the 4004 Microprocessor

The outcome of this partnership was the Intel 4004, introduced in 1971 as the world’s first commercially available microprocessor:

- Technical Details: The 4004 was a 4-bit CPU that incorporated 2,300 MOS transistors, marking a major leap in computing power and integration.

- Effect on Computing: The 4004 set the stage for the creation of numerous other microprocessors, like the Z80 and the 6502.

The Journey to Modern Processors

Perhaps more importantly, the 4004 started a chain of developments that would lead to the current age of computing:

- From the 4004 to the 8080: The success of the 4004 led to the creation of more advanced microprocessors, including the Intel 8080. The 8080 had improved capabilities and played a key role in the creation of early personal computers.

- The Start of x86 Architecture: The legacy of the 8080 continued with the creation of the 8086 microprocessor. The 8086 is especially important as it set the groundwork for the x86 architecture, which is still used in most personal computers today.

The introduction of the microprocessor marked a major shift in computing. It made it possible to create computers that were smaller, more powerful, and more flexible, leading the way for the revolution in personal computing. The impact of the 4004 and its successors can be seen in the wide range of digital devices that are a crucial part of modern life, showing the microprocessor’s role as one of the most important technological advancements of the 20th century.

The Present Status of Microchip Technology: A Cornerstone of Contemporary Existence

Today, microchip technology is a fundamental part of modern society, with its influence and significance touching nearly every aspect of our daily lives. This section looks at the current state of microchips, emphasizing their widespread use, technological advancements, and socio-economic effects.

Remarkable Technological Progress

Modern microchips are a testament to the peak of engineering and technological advancement:

- Billions of Transistors: Microchips today can hold billions of transistors, a huge leap from the handful of components in the earliest integrated circuits and the roughly 2,300 transistors in the Intel 4004. This density has been made possible by continuous improvements in semiconductor manufacturing technology.

- Shrinking Size and Increasing Power: This trend is captured by Moore’s Law, Gordon Moore’s observation that the number of transistors on a chip doubles roughly every two years. Continued miniaturisation has made devices not only smaller but also more powerful and energy-efficient, allowing for complex, multi-functional devices that were unthinkable just a few decades ago.
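The doubling usually associated with Moore’s Law compounds quickly. The sketch below projects transistor counts forward from the Intel 4004’s roughly 2,300 transistors in 1971, assuming an idealised doubling exactly every two years. Real chips have not tracked this curve precisely, so the output shows the scale of exponential growth rather than actual product figures.

```python
def projected_transistors(year: int, base_year: int = 1971, base_count: int = 2300,
                          doubling_period_years: float = 2.0) -> float:
    """Idealised Moore's Law projection (an assumption, not measured data):
    the transistor count doubles once every doubling_period_years after base_year."""
    doublings = (year - base_year) / doubling_period_years
    return base_count * 2 ** doublings


# Print the idealised projection at ten-year intervals.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(year):,.0f}")
```

Under this idealised assumption, fifty years of doubling multiplies the count by 2^25 (about 33.5 million), taking 2,300 transistors to roughly 77 billion, which lands in the same “billions of transistors” range described above.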

Microchips in Daily Life

The role and presence of microchips in modern life are immense:

- Everywhere in Devices: Microchips are found in a wide range of devices – from smartphones and laptops to cars, buildings, and even kitchen appliances. Their adaptability and functionality have made them essential to numerous applications.

- Running Modern Life: Beyond just being present, microchips control and enable nearly all aspects of modern life. They are the brains behind the digital and increasingly interconnected world, managing everything from communication and entertainment to critical infrastructure and transportation.

Economic and Strategic Significance

The microchip industry has become one of the most vital sectors worldwide:

- A Crucial Industry: Arguably, alongside agriculture, the microchip industry is one of the most crucial on the planet. It supports the global economy, fuels innovation, and is a key factor in the competitiveness of nations.

- Heart of Trade Wars and Geopolitics: The strategic importance of microchips has risen to the point where they are at the center of trade wars and international politics. The ability to produce advanced microchips has become a symbol of technological and economic power.

- National Security and Economic Disadvantage: For countries that can’t produce or secure a steady supply of microchips, there is a significant disadvantage. Microchips have become so vital that any disruption in their supply can have far-reaching effects on national security and economic stability.

In conclusion, the evolution of microchips from an innovative invention to a ubiquitous and critical part of modern life highlights their profound impact. As we look back on the history of the microchip, it’s clear that this small device has been a key player in the massive shift towards our digital world, economic dynamics, and global geopolitics. Its story is a tribute to human creativity and the relentless pursuit of progress.
